Without fundamental advances, misalignment and catastrophe are the default outcomes of training powerful AI

post by Jeremy Gillen (jeremy-gillen), peterbarnett · 2024-01-26T07:22:06.370Z · LW · GW · 60 commentsContents

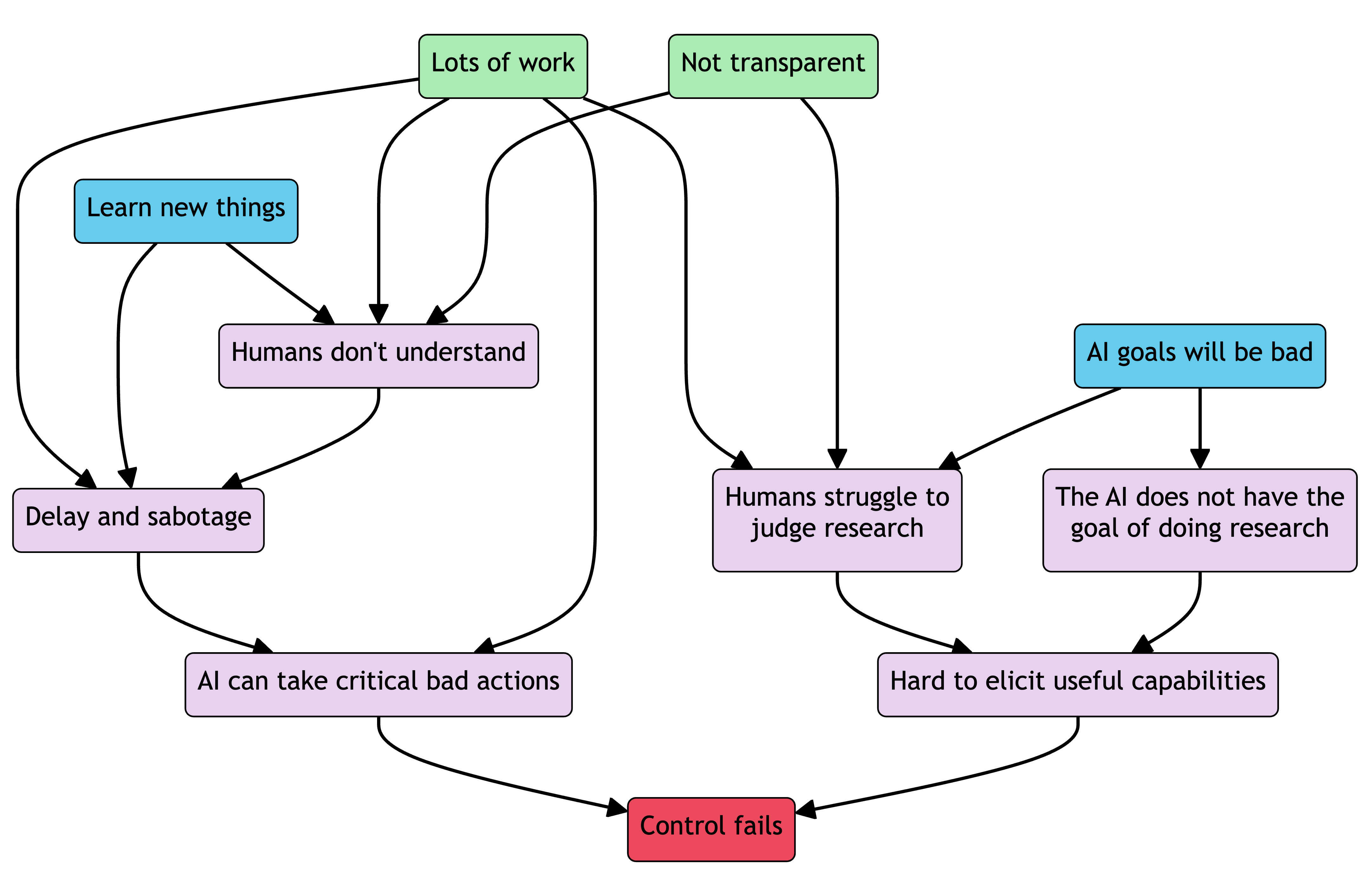

Summary Introduction Acknowledgments How to read this report Epistemic status Related work Section 1: Useful tasks, like novel science, are hard Impactful novel science takes a lot of work Outcome-oriented tasks Novelty and diversity of obstacles AI alignment research is hard Stopping misaligned AI deployment seems to require powerful aligned AI Conclusion Section 2: Being capable of hard tasks implies approximate consequentialism Future outcomes as goals Formalizing consequentialist goals Non-consequentialist constraints Combined goals Why consequentialist goals are a necessary part of powerful AI Robustness to diverse obstacles is driven by consequentialism Shallow and deep constraints Approximation Section 3: Hard tasks require learning new things The AI will need to learn new things Learning facts Learning skills Self-directed learning Human-directed learning is a big efficiency hit Indirect self-directed learning Useful versus safe tradeoff Examples Experimental science Theoretical mathematics Conclusion Section 4: Behavioral training is an imprecise way to specify goals Unstable goals Beliefs and goals can be mixed together Outer shell non-consequentialist constraints Changing goals over time Out-of-distribution generalization OOD generalization difficulty Instrumental goals as terminal goals Deliberate deception Badly designed training incentives Conclusion Section 5: Control of misaligned AIs is difficult Misaligned goal-directed AI won’t cooperate with humans Eliciting useful capabilities via outer training loop Difficulties Difficulty of escape compared to routine problem-solving Difficulty of sabotage and delay Transparency and honesty Eliciting dangerous capabilities Difficulties AI assisted monitoring doesn’t change the picture much Monitoring with powerful AI systems Monitoring with weak AI systems Conclusion Section 6: Powerful misaligned AI would be bad A misaligned AI achieving its goals would be bad for humans Instrumental convergence toward gaining control of limited resources Ambitiousness Consequences of misspecified goals Why would a powerful AI be capable of defeating humanity, given moderate levels of freedom? Conclusion Requests for AI developers Definitions Appendix: Argument graph Appendix: Attack surfaces Appendix: 4-hour research assistant None 61 comments

A pdf version of this report is available here.

Summary

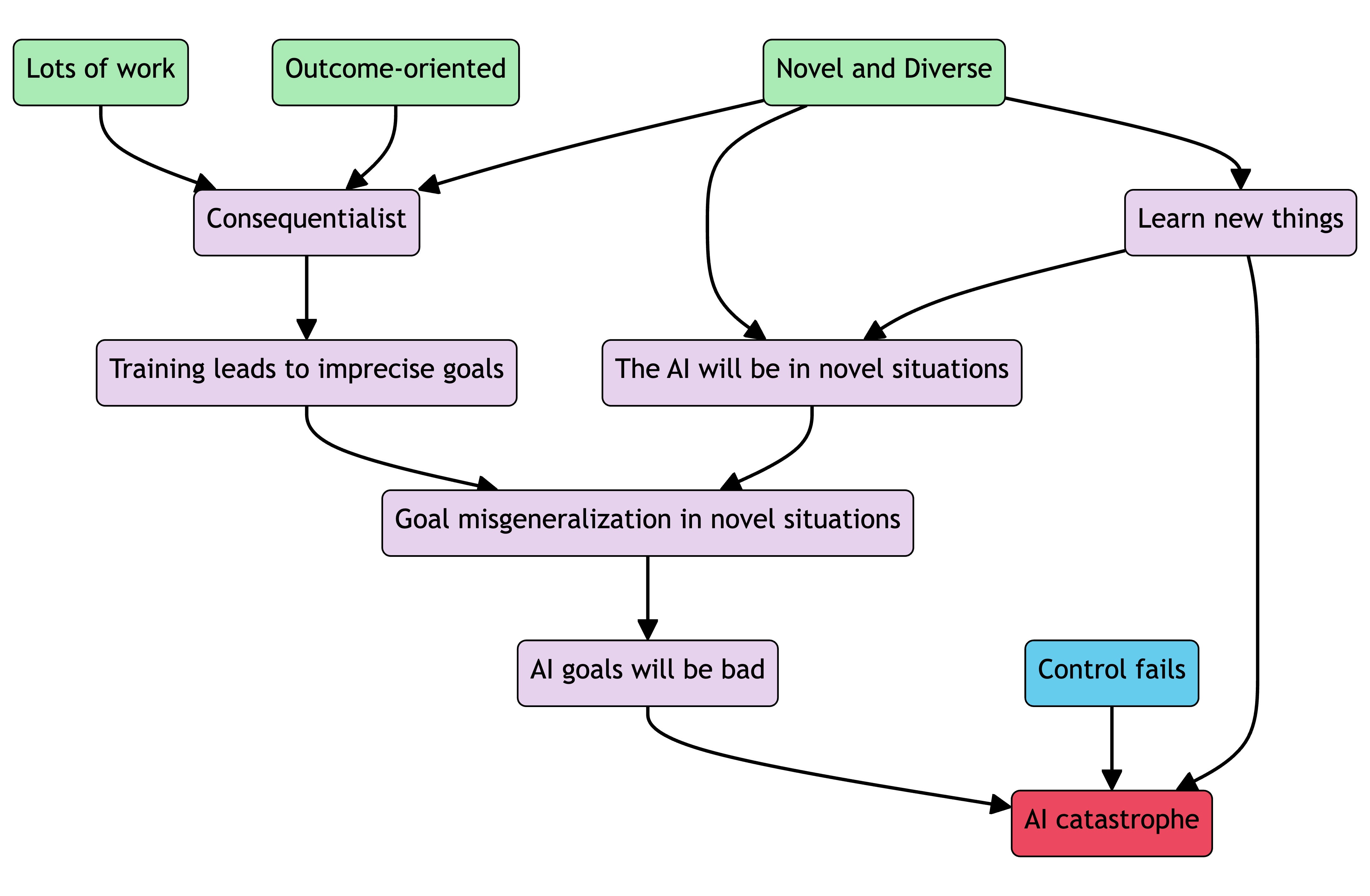

In this report we argue that AI systems capable of large scale scientific research will likely pursue unwanted goals and this will lead to catastrophic outcomes. We argue this is the default outcome, even with significant countermeasures, given the current trajectory of AI development.

In Section 1 we discuss the tasks which are the focus of this report. We are specifically focusing on AIs which are capable of dramatically speeding up large-scale novel science; on the scale of the Manhattan Project or curing cancer. This type of task requires a lot of work, and will require the AI to overcome many novel and diverse obstacles.

In Section 2 we argue that an AI which is capable of doing hard, novel science will be approximately consequentialist; that is, its behavior will be well described as taking actions in order to achieve an outcome. This is because the task has to be specified in terms of outcomes, and the AI needs to be robust to new obstacles in order to achieve these outcomes.

In Section 3 we argue that novel science will necessarily require the AI to learn new things, both facts and skills. This means that an AI’s capabilities will change over time which is a source of dangerous distribution shifts.

In Section 4 we further argue that training methods based on external behavior, which is how AI systems are currently created, are an extremely imprecise way to specify the goals we want an AI to ultimately pursue. This is because there are many degrees of freedom in goal specification that aren’t pinned down by behavior. AIs created this way will, by default, pursue unintended goals.

In Section 5 we discuss why we expect oversight and control of powerful AIs to be difficult. It will be difficult to safely get useful work out of misaligned AIs while ensuring they don’t take unwanted actions, and therefore we don’t expect AI-assisted research to be both safe and much faster than current research.

Finally, in Section 6 we discuss the consequences of building a powerful AI with improperly specified goals. Such an AI could likely escape containment measures given realistic levels of security, and then pursue outcomes in the world that would be catastrophic for humans. It seems very unlikely that these outcomes would be compatible with human empowerment or survival.

Introduction

We expect future AI systems will be able to automate scientific and technological progress. Importantly, these systems will be doing novel science, developing new theories, discovering new knowledge. We expect these systems will be doing large scale projects, the kinds of projects that would require many people multiple years to complete. This is the scale of the task that we will be considering in this report.

We expect such tasks to require robust goal-directed behavior, and we expect agents capable of such behavior to also be difficult to supervise and contain while retaining their usefulness. If such goal-directed agents are created by behavioral training, this training won’t be sufficient to precisely specify its terminal goals. This means that such an AI is unlikely to have goals that align with our goals. When people attempt to use misaligned powerful AIs for large scale useful tasks, these AIs are likely to escape and pursue their own goals. Behavioral training is the default way to create AIs, and so without fundamental advances (which we don’t speculate on) powerful AIs will likely be dangerously misaligned.

We argue that AIs must reach a certain level of goal-directedness and general capability in order to do the tasks we are considering, and that this is sufficient to cause catastrophe if the AI is misaligned. This does not require the AI to be “superintelligent” and “optimally goal-directed” in all situations.

One reason for pessimism about getting powerful AIs to safely do hard novel science is the scale of this task. We expect that the AIs will be doing the equivalent of years of human labor, and there will be many opportunities to take irreversible, catastrophic actions. We very likely cannot account for all of the unknown unknowns the AI will encounter in its research.

Our aim for this report is to explain the central difficulty where we expect AIs that are capable of novel science to also be dangerously misaligned, and why we expect this problem not to be solved if we continue along the default trajectory. We focus on the difficult tasks which we assume the AIs will be capable of. The specific capabilities required for these tasks, combined with the imprecision of behavioral training, lead us to expect misaligned goal-directed behavior, even though current, 2024, AI systems do not seem dangerously capable and goal-directed.

We aim to lay out a mostly complete argument for our mainline beliefs about catastrophic outcomes caused by AI, starting from the tasks we assume they are capable of.[1] This risk model can be thought of as an “inner alignment” failure, where even if we knew what to tell the AI to do, we are unable to make it safely do that.

We hope that this can be helpful for informing AI safety research directions; ideally focusing research effort on approaches which are still valid for extremely powerful AI systems or on foundational research to avoid the problems inherent to behavioral training. Many of the claims in this report are not of the form “It is impossible in principle to get an AI with this desired property” but rather “Given how we made the AI and that it is capable of certain things, it is unlikely to have this desired property”. It is not impossible to create an aligned or safely constrained powerful AI, however this is unlikely if the AI is created using current methods.

This report considers AIs that are capable of hard, novel science, and we expect AI alignment research to be in this category (see Section AI alignment research is hard). Therefore, difficulties and dangers in this report should be relevant considerations for groups attempting to use AIs to do AI alignment research. The most prominent example of this that we know of is the OpenAI Superalignment team, although teams at Anthropic and Google Deepmind are probably pursuing similar strategies. This also includes any group aiming to radically speed up scientific progress using AIs.

Acknowledgments

We would like to thank Lisa Thiergart for managing this project. Many thanks to Thomas Kwa, James Lucassen, Thomas Larsen, Nate Soares, Joshua Clymer, Ryan Greenblatt, Buck Shlegeris, John Wentworth, Joe Carlsmith, Ajeya Cotra, Richard Ngo, Daniel Kokotajlo, Oliver Habryka, Tsvi Benson-Tilsen for helpful discussion and feedback. All views and mistakes are very much our own.

How to read this report

This report is intended to explain the authors’ beliefs and the main reasons for these beliefs. Each section is stating a thesis which depends on a number of assumptions. The sections are ordered such that assumptions of later sections are argued for in earlier sections. Unfortunately, this means that at any point the reader doesn’t agree with an argument we make part way through, the rest of the document won’t feel fully justified. In this case, the reader should treat each section as a separate argument for a conditional statement: If we believe the assumptions[2], then the section is our mainline reasoning for believing the conclusion.

The arguments that are “most central” vary a lot between people. We are trying to provide the arguments that would be most likely to change our beliefs if we discovered they were wrong. There is a heavy bias toward arguments that are most salient to us. These arguments are usually salient because they have been important in disagreements with people we regularly talk to.

This report represents the views of the authors, not the views of MIRI or other researchers at MIRI.

Epistemic status

We are fairly confident about most of the individual claims in this document, however this doesn’t mean we are confident that all arguments and assumptions are correct. We think it is likely that there are sections that contain mistakes, and it's plausible that such mistakes dramatically change our conclusions. However, we still think it is important to communicate our overall beliefs due to their implications for research prioritization and other planning.

Related work

We will compare this work with related work on similar threat models, see here [AF · GW] for a more thorough review of threat models.

Risks from Learned Optimization describes why we would expect AI systems to be goal-directed, and how behavioral training is not sufficient to precisely specify these goals. Our work focuses on difficult tasks and argues that systems capable of these will have to be goal directed, we also discuss how behavioral training can lead to unstable goals, not just improperly specified goals.

Is Power-Seeking AI an Existential Risk? lays out a conjunctive argument for expecting existential risk from powerful AI. We attempt to focus more on why we expect trained AIs to be misaligned and goal-directed, and given this how such an AI could evade our countermeasures.

AGI Ruin: A List of Lethalities [LW · GW] lays out many reasons why one would expect powerful AI to lead to catastrophe. Our work attempts to lay out a more cohesive and expanded picture, and we focus more on how a powerful misaligned AI could evade human oversight and control.

A central AI alignment problem: capabilities generalization, and the sharp left turn [LW · GW] describes a specific problem related to powerful AI, where once an AI is capable it is revealed that it was not aligned, and the AI then pursues some unintended goal. We make a similar argument, but attempt to connect our story more to the specifics of AI development and the tasks powerful AIs are expected to do.

How likely is Deceptive Alignment? [LW · GW] argues, by considering path dependence and inductive bias in neural network training, that AI systems are likely to be deceptively aligned; faking alignment during training and later pursuing misaligned goals. Our work does not focus on the inductive bias or path dependence of training AIs, but rather argues that AIs which are capable and created using behavioral training will likely be misaligned.

Various arguments made in related work are based on “counting arguments”; arguments of the form “there are many goals that are consistent with an AI’s behavior during training, so we should not expect the AI to pursue the specific goal we want”. We make a similar argument by compiling multiple “degrees of freedom” in an AI’s goal specification.

Section 1: Useful tasks, like novel science, are hard

We start with an assumption that, when developed, powerful AI systems are capable of large-scale, difficult tasks, and people will try to use them for such tasks.[3] We will discuss specific properties of these tasks, and later in this report we will argue that by default AIs capable of tasks with these properties will be misaligned and dangerous.

These tasks will have large search spaces, requiring many actions and where success is only achieved by a relatively very small set of action sequences. They will also be outcome-oriented, novel, and diverse. Throughout this report we refer to tasks with these properties as hard tasks, and will expand on the specifics of these properties in this section. By outcome-oriented, we mean that we have to specify the task by the outcome it achieves, because we don’t know the sequence of actions to achieve it. By novel, we mean that such tasks would require the AI to do things they weren’t initially trained to do.[4] By diverse, we mean that there are a wide range of skills required to successfully do the task. These properties will be expanded on in this section.[5]

These properties define a kind of task that we will use as the defining capability of powerful AI. In this report we will refer to these as hard tasks, this specifically refers to these tasks which have large search spaces, are outcome-oriented, and contain novel and diverse subproblems. We are using hard to refer to tasks that have these properties, and not just any task that a human would find difficult.

Impactful novel science takes a lot of work

We will be focusing on AI systems which are capable of doing novel science,[6] the scale of the work we are imagining is curing cancer or some similarly large scientific endeavor. Tasks like this would necessarily require systems to be operating over long time scales. A central intuition pump here is the Manhattan Project, which took three years to design and produce the atomic bomb. Other examples could include:

- The Human Genome Project

- The Apollo Program

- Proving Fermat’s Last Theorem

- The development of modern microbiology, starting at from the first observations of microbes

- The development of quantum mechanics

We don’t know how much work would be realistically needed to cure cancer and it may be easier than the above examples, but we can lower-bound this based on the amount of work that humans have put in to date. This task so far has already taken thousands of humans decades of work.

Some of the work may be parallelized, but there is a lot that cannot be; discoveries that are made along the way will be necessary for later steps, and will change the course of the research. New methods will likely need to be developed, and old methods applied in new ways. This will necessarily require large amounts of serial work.

Outcome-oriented tasks

A task like doing novel science (for example, curing cancer) is outcome-oriented. That is, the task is defined by achieving some specific outcome in the future, and we are able to describe this outcome in advance. Tasks that we define by describing the necessary actions are less outcome-oriented. For example, instructing someone to bake a cake by giving them exact instructions is less outcome-oriented; while telling someone to bake a cake and having them work out the steps to get there by themselves is more outcome-oriented.

When we tell an AI to achieve an outcome which we don’t know how to achieve, this is inherently outcome-oriented. By this we mean that we don’t currently know the procedure to achieve the desired outcome and so the AI has to work out the procedure; we don’t mean that this is a task that humans could never achieve with substantial effort. This applies to “curing cancer” because we can’t specify the exact sequence of actions that lead to success, we can only specify success criteria. For example, the success criteria could be “have a medical intervention which can remove all the cancer cells in a patient’s body, while leaving the other cells intact and the patient otherwise unharmed”. Novel science in general has this property, because this inherently involves discovering new things and using those discoveries, hence we cannot describe all of the actions in advance.

Novelty and diversity of obstacles

Obstacles in hard tasks like novel science are not predictable in advance, and often dissimilar to obstacles previously encountered. Writing a program could involve inventing or appropriating a new data structure which works with the particular constraints of the specific problem. In science, it can be extremely valuable to sort through messy debates containing arguments about which data is relevant and real and combine this information with context, to decide which experiments are most valuable to do next. In adversarial settings, an agent needs to deal with other agents which seek out and play strategies that it has the least experience with.[7]

Sometimes a researcher is missing necessary skills or knowledge and has to work around that somehow, either by gaining the skills or knowledge, or finding a route that doesn’t require these. Different resources can be a bottleneck at different times, leading to new and different constraints. Available resources may change which can necessitate a different approach.[8]

Above examples of diverse obstacles share the property that each new obstacle may require a novel strategy to address. The defining property of a successful novel strategy is that it still leads to the desired outcome. Defining the strategy by other means, like by describing a particular sequence of steps, or a particular heuristic to locally optimize, becomes harder as the diversity and novelty of obstacles increases. Defining strategies without reference to goals often requires predicting and coming up with solutions to obstacles before running into the particular obstacles that need solutions.

Among different goals and environments, there are differences in the level of novelty and diversity of obstacles. It looks like hard research that generates genuinely new and useful insights will require facing repeatedly very novel and very diverse obstacles. Hard novel science, such as curing cancer, seems extremely likely to fall into this category.

AI alignment research is hard

We think that the task of doing useful AI alignment research is a task which is hard and outcome-oriented, and will require novel and diverse skills. By “do useful AI alignment research” we mean that the AI system would be able to perform or speed up human research output by 30x for the research task of “build a more powerful AI which can do novel science faster than humans, which we are confident will do tasks it is directed to do, while choosing not to irreversibly disempower humanity”.

This task, especially getting sufficient confidence in safety, we think will require:

- Novel mathematical work, developing mathematical models that have not been explored before.[9] This work will likely need to build on itself, requiring inventing and understanding one new mathematical model, and then developing another based on that. This kind of work seems necessary for building an AI that is very stable under learning and reflection (i.e. acts in well-understood ways while learning a lot, especially when it comes to ontological crises and self-improvement).

- Empirical work to validate the theory. This will likely require coding to run large empirical tests of various components of the designed AI system, as well as testing bounds and approximations.

- Engineering work, building and iterating on the (hopefully) safe AI system. This will likely require large scale software engineering, similar to the scale that is required to build large foundation models. We expect this to be much more complicated than current foundation models, because the system probably needs to be something more complicated than an end-to-end trained black box.

While we expect AI alignment research to be hard and require these skills, the overall argument in this report does not rely on this assumption. We will be arguing that AI systems trained using current methods (if they are capable of hard, novel science) will be misaligned[10] and too dangerous to use.[11] We separately believe that solving AI alignment will require hard, novel science (but won’t argue for this further, in this report).

Stopping misaligned AI deployment seems to require powerful aligned AI

Many well-resourced companies and governments are motivated to build powerful AI. Any approach to AI safety has to deal with the problem of surviving when a less competent and safety-conscious actor could create and accidentally release a misaligned AI. We don’t know of any approaches to this that don’t involve a safe, aligned powerful AI.[12] We would welcome being wrong, and would be excited about concrete strategies that would make the world existentially secure without needing to solve alignment and build a powerful AI.

Conclusion

For the rest of the report, we frequently refer to hard tasks as tasks that have extremely large search spaces (relative to the set of solutions), are outcome-oriented, and contain a lot of novelty and diversity. In this section we have argued that large-scale novel science is hard in this sense. We are aware that the difficulty of tasks falls on a spectrum, when we say "hard task" we are referring to tasks of similar scale and diversity to the Manhattan Project or other tasks discussed in this section.

We expect useful AI alignment research to be hard in this way.

We argue in Sections 2, 3 and, 4 that capability to do this sort of task implies that we can model powerful AI as approximately “consequentialist”, and there is difficulty in specifying the goals that such an AI will be pursuing.

Section 2: Being capable of hard tasks implies approximate consequentialism

In the previous section, we argued that an AI which is able to do hard, novel science must be capable of tasks which are:

- Easy to describe in terms of future outcomes

- Difficult to describe precisely in other ways (due to novelty and diversity of obstacles)

In this section we will argue that this implies AIs with such capabilities will be capable of approximately consequentialist behavior. By this we mean that the AI will be capable of taking actions to achieve specific outcomes, this will be further defined in this section.

Future outcomes as goals

Many useful goals are simply specified by future outcomes. By future outcomes, we mean roughly a property or fact about the future world. In particular, the important part of future outcomes is that they don’t have strong dependence on near term actions. We argue that AIs doing outcome-oriented tasks, as described in Section 1, will be well described as behaving as if they are robustly pursuing future outcomes (i.e. their behavior will be consequentialist). A “goal” is a representation inside the AI, while a “future outcome” refers to the state of the real world.

Future outcomes are usually the only simple way to describe success on a task, without knowing in advance how it should be done. An AI that is robustly capable of completing the task must have some means of recognizing actions that lead to success. It can’t have memorized specific strategies for overcoming all obstacles, because there could be arbitrarily many of these. Therefore, it must be capable of calculating strategies on-the-fly, as it learns about new obstacles. We will expand on this argument later in this section.

Formalizing consequentialist goals

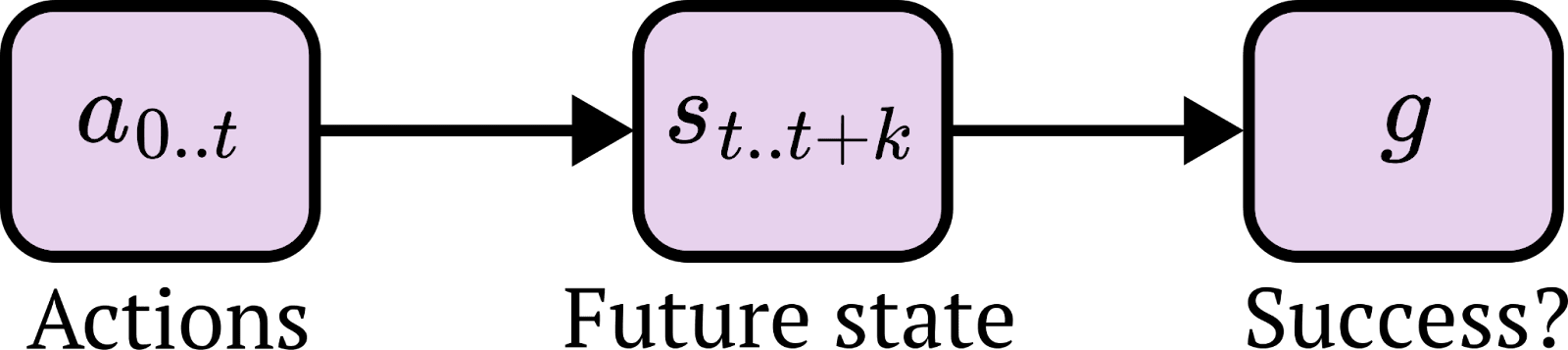

We can construct a simple definition of consequentialist goals based on the idea that success can be evaluated entirely by looking at future states, rather than the path that led there. This definition can be represented as a causal graph:

Here the actions affect the future state which affects success at achieving the goal; the only way the actions affect the goal is via the state.

If are early actions, are a short sequence of states in the future[13] and is some internal representation of the outcome-goal in an AI, then a consequentialist goal has a property like .[14] Given that we know what the future state is, knowing what the actions were doesn’t tell us any more about whether the goal was achieved (note this is an identical statement to the causal diagram above).

Non-consequentialist constraints

There are also ways to specify behavior that don’t look like taking actions to achieve future outcomes, and instead are more like constraints on actions and intermediate states. Examples of such constraints: Always act “kindly”, or always follow a particular high-level procedure while completing a specific task, or always do a particular action upon particular observation. Humans also have plenty of similar shallow constraints; for example, disgust reactions, flinching, fear of heights. More deontological ethical prohibitions, like “don’t kill”, are also an example.

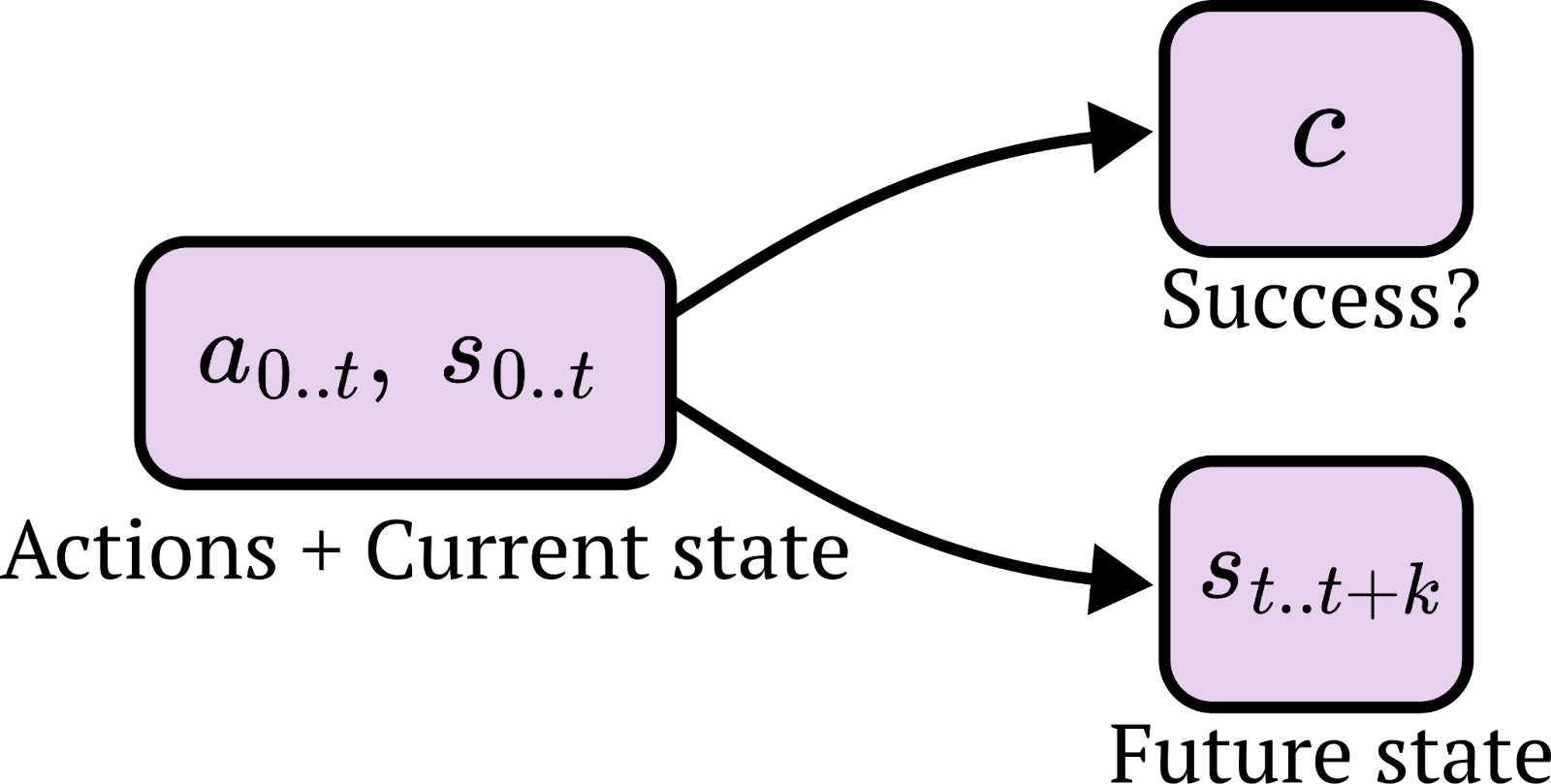

One could define such non-consequentialist goal specification as a variable that is primarily dependent on short term behavior and state, and not strongly dependent on the outcomes in the future, i.e. . Here is defined by modeling the agent as choosing actions that result in success according to . Conditioning on the actions and states in the short term, the final states don’t give you additional information about whether the AI successfully followed its constraints.

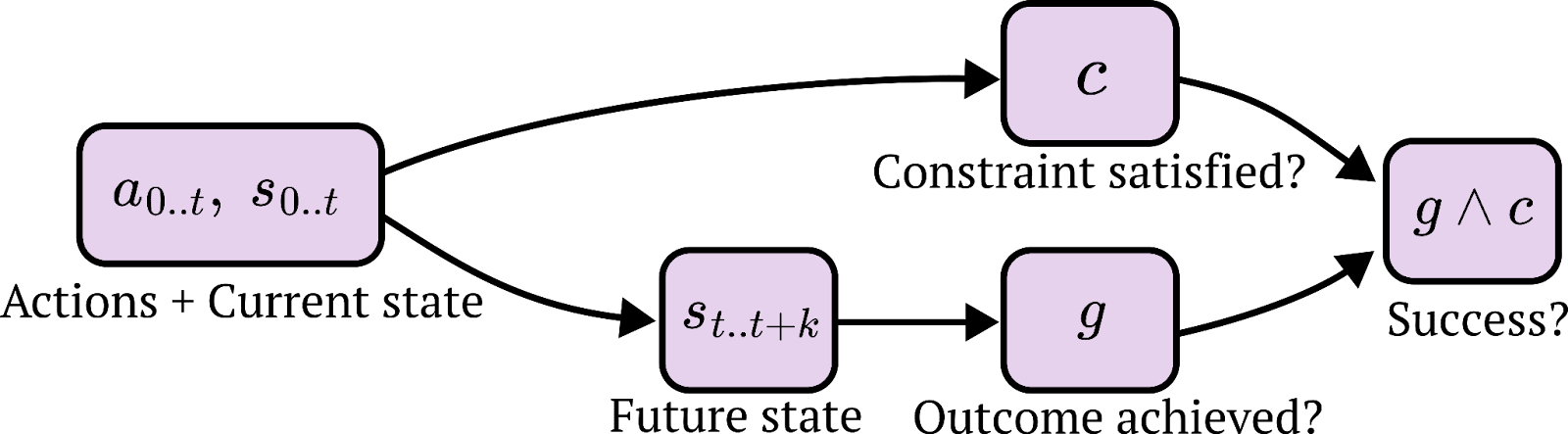

Combined goals

Constraints and consequences can be combined to describe many problems. For example, winning chess might be described by defining the action space and legal moves, alongside a description of checkmate. The next section will argue that, while combined goals are a more accurate model for the behavior of powerful AI, the primary driver of intelligent problem-solving behavior will tend to be consequentialist goals.

The distinction between constraints and consequences is useful for describing why powerful trained systems are likely to be an existential risk by default. Specifically, the danger comes from a powerful trained AI pursuing a different consequentialist goal than the one that we intended, and is missing constraints that we intended it to have. The reasons why the precise goals and constraints are likely to be learned incorrectly are described in Section 4.

There exists much more complicated behavior that isn’t precisely captured by this simplified model of goals. Despite this, we think this description of goals is sufficient for describing the main reasons we expect misalignment in real agents.

We call an AI “misaligned” if its behavior is well described by goals and constraints that are different from the goals and constraints intended by its human creators.

Why consequentialist goals are a necessary part of powerful AI

Robustness to diverse obstacles is driven by consequentialism

The primary reason we expect approximate consequentialism to be a good model for the behavior of useful systems is that we are assuming the AI is capable of generalizing well. By this we mean the diversity property in Section 1; the tasks we are assuming the AI is capable of involve a diverse array of unknown obstacles and difficulties, and the AI is able to achieve a particular outcome in spite of these obstacles. This allows us to make inductive conclusions such as: If we know the AI can overcome one hundred particular obstacles when pursuing a particular goal, it can likely overcome another obstacle that is in roughly the same reference class as the first hundred.

One of the main things that is useful about powerful AI is its ability to overcome many diverse and unknown obstacles, and this is what leads us to think of powerful AI as primarily pursuing consequences. When the AI generalizes to novel hard tasks, its behavior is still well described taking actions in order to achieve outcomes, in spite of unknown obstacles. This is what we mean by behaviorally consequentialist.

Humans attempting to achieve outcomes can be modeled as consequentialist. When we want to do something we work out a way to do it; when we encounter obstacles we work out ways to overcome them or search for alternative routes. Humans are not perfectly consequentialist, we often give up on things before achieving success, but we are consequentialist enough to radically reshape our world. Current LLMs such as GPT-4 are useful for many tasks, but these are generally similar to their training distribution. They may be modeled as consequentialist when close to their training distribution, but this breaks relatively often when they encounter novel obstacles.

For example, we might assume an AI has the goal “build a fusion rocket”, and for this it must be capable of completing many engineering subtasks of the form “have a design idea to solve a problem, build models to empirically test unknowns, refine design based on data”. Because there is a huge variety of subtasks of roughly this form, it’s likely that if given a new arbitrary subtask of this form (of a similar difficulty) the AI will be capable of completing that subtask. If it has generalized this far, it will probably continue to generalize.[15]

Compute budget and generalization pressure toward consequentialism

For some types of task with many diverse obstacles, there appear to be (large) benefits to computing actions on-the-fly, after observing the current obstacle, instead of in advance of knowing about the specific obstacle that the agent is facing. This is similar to the argument sketch in Conditions for mesa-optimization [? · GW].

We first make the simplifying assumption that each obstacle-overcoming strategy takes some amount of computation to work out (either during training or deployment). There are many possible obstacles. We could try to precompute every obstacle-overcoming strategy in advance, however this would take a huge amount of compute. Therefore, it is more computationally efficient overall to develop an algorithm which can work out how to overcome obstacles on-the-fly. The AI waits until it encounters an obstacle, and then runs the computation to work out how to overcome it.

Another variation of this argument is about generalization rather than the compute budget. If we’re doing the precomputation approach, and we don’t know or can’t iterate over all the possible obstacles in advance, then the AI won’t have memorized strategies that generalize well at inference time if an entirely new class of obstacle shows up. In contrast, if the AI operates by storing the outcome it wants, it can (try to) understand the obstacle on-the-fly and compute a new winning strategy.

Shallow and deep constraints

Another reason we consider outcome-oriented goals to be important is that shallow constraints on behavior tend to be easy to exploit (or hurt the usefulness of an AI if they are overly broad). By shallow constraints, we mean constraints that are easy to implement or specify, but don’t robustly serve their intended purpose when subjected to optimization pressure. In other words, shallow constraints don’t generalize well. For an intuitive example, a prohibition against saying explicitly false statements is easy to exploit by selectively revealing information or by using misleading but technically correct definitions. Deeper constraints, like “don’t manipulate person X while trying to achieve outcome Y”, tend to be most naturally represented as outcomes (e.g. with “did X’s beliefs become less true as a result of talking to me”, or “if X knew what I knew, would they be upset by the way I communicated with them”[16]). Another example of a shallow constraint might be “don’t kill people by doing recognizably people-killing actions”. This kind of constraint wouldn’t slow down a determined murderer. Legal systems instead use the outcome, intent and causal influence to define murder, which is a far better definition (but also has some ambiguous edge cases).

Constraints are hard to specify in a way that generalizes to new or different situations, or new strategies, because the AI is creatively searching for ways to achieve particular outcomes and will tend to eventually find loopholes. This is not to say it’s impossible, merely that specified constraints need to solve an adversarial robustness problem.

Approximation

It’s important to note that when we describe an agent as pursuing consequentialist goals, we’re not saying it must be doing this optimally. Optimal consequentialism has a few tractability issues. Our claim is that it’s close enough such that it usually works, and usually generalizes well to new obstacles of similar difficulty to past obstacles. Specifically, it works and generalizes well enough in order to do hard tasks.

Whether the AI is well described as goal-directed is not binary, and this may apply more or less in different situations. We only assume that the AI is far enough along on the spectrum of goal-directedness, such that it is able to do hard tasks as specified in Section 1.

We can describe some axes upon which consequentialist AIs can be approximate.[17] One type of approximation is how robust is the AI to unexpected obstacles (where more robust means the AI is capable of recovering from or working around a larger set of unforeseen obstacles). For example, your walking robot might recover from gently poking it, but might not recover from being knocked to the ground. Being knocked to the ground was outside of its set of unforeseen obstacles that could be overcome.

Another axis of approximation is to what extent the AI is correctly making (probabilistic) trade-offs, given a preferred outcome and many pathways to achieve that outcome. For example, suppose an AI must play a sequence of lotteries to gain money,[18] which it can later spend to achieve some desired outcomes. To what extent does it make decisions that avoid sure losses or take advantage of sure gains? How efficient is the AI with respect to a given set of other AIs?[19]

Conclusion

Powerful AIs should be behaviorally modeled as approximately selecting their actions to produce specific outcomes (often subject to non-consequentialist constraints). This is a necessary consequence of their capacity to solve hard tasks which involve unpredictable and complex obstacles; they are consequentialist enough to be able to do hard tasks. This doesn’t mean that such powerful agents must act to achieve outcomes “by any means necessary”. Constraints on behavior are not ruled out. We will argue in later sections that a powerful AI will be dangerous if its consequentialist goals or constraints are misspecified.

Section 3: Hard tasks require learning new things

In previous sections we have argued that doing large scale novel science is a lot of work; and that an AI capable of this will be well modeled as doing consequentialist problem solving, i.e. taking actions in order to achieve outcomes. Part of why novel science is difficult is because it will require skills and knowledge that an AI doesn’t initially have. In this section we will discuss why an AI will need to learn new things, and that this learning will need to be self-directed (not directed by humans-in-the-loop).

The AI will need to learn new things

If an AI system is going to do novel science on the scale of curing cancer or the Manhattan Project then it will need to be able to learn things. The AI will need to learn empirical facts that it didn’t originally know, update its models of the world, and learn new skills that it wasn’t originally trained to do.

Learning facts

A simple example could be that the AI does not know a specific fact; it may be missing the value of a physical constant, or not have read the operating manual for a particular machine. An AI doing novel science must be able to realize that it doesn’t know a specific fact, and then take actions to learn it. For example, reading a physical constant from a textbook, planning and performing an experiment to measure a constant, or reading an operating manual in order to use a machine for a specific task. All the information that the AI needs will not be “stored in the weights” from the initial training.[20] When doing novel science, the AI can’t initially know all the facts it needs, because many of these facts won’t have been discovered yet.

Learning skills

Further, the AI will need to learn new skills, not simply learn new facts. The AI will run into cases where it doesn’t know how to do something and so needs to learn. There will be particular algorithms or methods that are needed for the novel problems it is solving, but were not available (or invented) during the AI’s initial training. An AI that was never trained on French could not be expected to be able to write in French without learning how to; similarly, an AI that was never trained on differential calculus would not simply know differential calculus. When doing novel science, AI systems will need to learn skills that others have developed previously (such as French or differential calculus), as well as develop skills that it needs to invent itself because they have not yet been invented by humans.

As with learning facts, these new skills initially will not be “stored in the weights” because the training process will not have had any reason to build them; especially if we are expecting the AI to generalize far from the training distribution. It doesn’t seem likely that an AI would be able to intuit or extrapolate to differential calculus if it was never trained on it. This is not a claim that an AI could not learn differential calculus, but rather that this will require explicit work from either humans or the AI.

As an example, during the Manhattan Project, scientists invented Monte Carlo methods for numerically performing complicated integration. These methods simply did not exist before the Manhattan Project, and so they needed to be invented and specific skills needed to be learned. The same applies for similar cases, such as developing mathematical theory to describe the hydrodynamics of shockwaves and centrifuge design.

Self-directed learning

This section will argue that the AI likely needs to be doing self-directed learning, where the AI itself is controlling what it learns. The alternative to the AI doing self-directed learning would be for a human to be constantly overseeing the AI, and looking for when the AI needs to learn a fact or skill. Then the human would either train the AI using supervised learning or RL, or assign the AI to learn this skill or fact as a new task.

Human-directed learning is a big efficiency hit

For the human to be able to competently and safely direct the AI to learn things, the human would have to adequately understand both the problem being solved and the AI’s capability profile. Specifically, the human would need to know what skills were required to solve the problem, and that the AI was currently lacking those skills. It will be much faster for the AI to know this, as it is the one actually solving the problem, and has access to its current knowledge. This seems important when the AI is working in a domain that the human doesn’t understand. If the AI is bottlenecked on human understanding, including when exploring research directions that don’t pan out, the research speed won’t be much faster than human research speed.

Additionally, some skills are only legibly useful with the benefit of hindsight, and so it may be hard for the human to realize that the AI needs to learn these. It can be difficult to explain the usefulness of math to students, and similarly, it may be difficult to realize the benefit of particular knowledge prior to knowing it.

Indirect self-directed learning

The AI may be able to “indirectly” do self-directed learning, for example by telling the human which skills or facts it should be trained on next. If the human doesn’t fully understand the problem and is just deferring to the AI, then this is effectively the same as the AI doing self-directed learning. The AI is just “using the human as a puppet”, or simply working around the human. There is some additional safety because the human may be able to prevent the AI from learning obviously harmful things. This seems like the most likely outcome of a naive attempt to put humans in the loop.

Useful versus safe tradeoff

Learning is useful for completing hard tasks. Having a human in the loop, deciding what should be learned is safer. For some tasks, having a human in the decision loop is fine. The claim we are making is that for hard tasks there is a significant tradeoff to be made, where putting a human in the loop will dramatically slow down the overall system. This topic will be discussed more in Section 5.

This is a specific case of a more general lesson; complex multifaceted tasks contain lots of bottlenecks, and solving one bottleneck means that the next bottleneck will dominate.[21] This isn’t a fully general argument against it being possible to speed up anything. It is an argument that dramatic acceleration on very diverse tasks requires an algorithm capable of attacking approximately every type of bottleneck that comes up.

Examples

Here are two brief examples from the history of science where learning of facts or skills was necessary to make progress, and that this learning needed to be self-directed because knowledge needed to be built on previous discoveries.

Experimental science

We can consider Hodgkin and Huxley discovering the ionic mechanism of action potentials. An observation had been made that some squids had extremely large axons, and so were more amenable to experimentation. This allowed electrodes to be inserted into cells in order to measure the potential difference across the membrane of the cell. Such an experiment would not have been possible with smaller cells. Here, we can see that a fact was learned (some squids have extremely large axons), and knowing this fact allowed for a novel experiment (including novel experimental techniques) to be developed, and this led to an important discovery (the ionic mechanism of action potentials). Learning the initial fact was needed and a chain of facts and techniques were built upon it in order to discover and demonstrate the mechanism of action potentials.

Theoretical mathematics

We can also look at a theoretical example, which does not require learning from observations in the world; the development of integration. In the 17th century Newton and Leibniz showed a connection between differentiation and integration with the fundamental theorem of calculus. However, integration at this point had not been rigorously formalized. Formalization of integration required the mathematics of limits; it was not until the 19th century that integration was formalized by Riemann. Here, the development of a rigorous definition of integration required the initial non-rigorous definition as well as additional mathematical tools (limits).

These examples make the (perhaps obvious) case that when doing novel science, the AI system (or a human) will need to learn both facts and skills, and that these will necessarily build on themselves. The task of science is often inherently sequential.

Conclusion

An AI doing hard tasks will need to learn new things because we are asking it to do something novel; the task requires skills and knowledge that were not part of its initial training. The AI will likely need to learn a wide range of things, including things that were not specified or known in advance. It is much faster for the AI to do self-directed learning, rather than having a human direct the learning, which would require the human to have a deep understanding of what the AI is doing.

In the following sections we will discuss two important consequences of self-directed learning:

- It is a major source of several kinds of distribution shift (relevant for Section 4).

- It causes a number of problems for oversight, control, and predicting the limits of the capabilities of an AI before using it (relevant for Section 5).

Section 4: Behavioral training is an imprecise way to specify goals

This section is about AIs which are created by behavioral training, and also are capable of doing the hard tasks as described in Section 1. By behavioral training we are referring to a wide variety of training techniques, which involve running a parameterized model, providing feedback on the output of the model, and updating the model based on this feedback. Examples include model-free RL using PPO, model-based RL like MuZero, next token prediction on tokens describing goal-directed behavior.

In previous sections we have described the behavioral properties that appear to be necessary for hard tasks. That is: powerful AIs are behaviorally, approximately, optimizing their actions to produce outcomes.[22]

We haven’t described the internal operation of such trained AIs, mainly because we expect it to be a mess in the same way that humans and other evolved systems are a mess.[23]

There are several categories of problems that make it difficult to specify goals. Each category introduces an uncontrolled degree of freedom in the goal specification which exists because we are only using feedback based on behavior. Because there are lots of potential degrees of freedom that we don’t have control over (via behavioral training), we can think of the space of “intended goals” as being a small subset of the space of “goals that empirically are pursued after training and deployment”. In this way, behavioral training is imprecise and is exceedingly unlikely to nail down the goal we intended. We describe multiple separate failure modes and so failure is disjunctive; we only need one failure of goal specification for the AI to be misaligned. Here is an overview of the problems that we will describe in more detail:

- “Goal instabilities” that can come from the AI doing instrumentally convergent, capability-improving operations (during the process of doing hard tasks). These mean that the apparent goal of the AI changes over time, leading to success in training but unintended behavior after deployment.

- Goal specification that generalizes out-of-distribution is difficult because it’s hard to distinguish between terminal and instrumental goals, and because the “distribution shifts” that we need a goal specification to be robust to are particularly large.

- Outer behavioral incentives are difficult to get right in the first place. If these aren’t perfect, they incentivize “playing the training game [LW · GW]”. This section will be brief, since this issue is well described elsewhere.

We will describe some of these problems using terms like probability, belief, utility, goal for concreteness, but each problem applies to learned algorithms that approximate goal-directed behavior. These problems are largely caused by the fact that the learned algorithm is messy and unstable, for example by the designers not carefully tracking the distinction between beliefs and goals in the clean way that the internals of an expected utility maximizer would.[24]

Unstable goals

In these examples, the AI initially appears to be pursuing one goal in training, but then takes some actions in order to become more capable, and this causes it to pursue a different goal.

Beliefs and goals can be mixed together

This example demonstrates a pattern common across other examples: we train an AI, this leads to an unstable implementation (of the AI) that generates behavior sufficient for low training loss. Some internal process eventually breaks this unstable implementation, causing the AI to pursue unintended goals.

This first instability comes from a fundamental fact about probabilities and utilities: the same behavior can result from different sets of preferences and beliefs.[25] For example, an AI might execute a certain behavior, believing this helps it achieve some goal, but the belief is false, and in fact the behavior results in a different outcome. Or an AI might falsely believe a subgoal is instrumentally useful for reaching a terminal goal. In these cases, the AI behaves as if it is pursuing one goal, but it would not behave this way if it had different (more accurate) beliefs.

One of the differences between beliefs and goals (as we use these terms) is that beliefs update over time, as observations are made, and as evidence is considered. One might imagine an AI that is being trained using reinforcement learning to solve difficult puzzles, but where there is a way to hack the solution-checker. With good oversight, the AI would receive low reward for hacking the solution-checker during training, and one might hope that this correctly puts a “morality-like” constraint into the AI’s goal. But there is another way it might update: the training could insert a false belief[26] into the agent. This might be a belief that hacking the solution-checker doesn’t work, or leads to some other bad consequence, or is more difficult than it truly is. Later, if the AI updates this belief (because the belief was false), it may attempt to hack the solution-checker.

This mechanism (false beliefs instead of correctly specified goals and constraints) creates many additional degrees of freedom that allow particular behavior to be selected for, without the RL training necessarily having much influence on the ultimate goals of the agent.

Outer shell non-consequentialist constraints

There is a difference between the sort of constraints that are built into the core terminal goals of an AI, and the sort of constraints that are implemented outside of the core outcome-seeking algorithm, perhaps thought of as a kind of wrapper around the outside. The core outcome-seeking algorithm may treat the wrapper as an obstacle, and try to work around it or remove it in order to achieve its goal.[27]

In humans, an instinctual fear of heights is (probably) an example of this. A fear of heights is not a value that a human would usually endorse having in their terminal goals, and if it were really getting in the way of achieving their goals, they would look for ways to overcome it. This is an example of an agent that initially produces behavior that appears to obey a constraint, but when there is a sufficiently large conflict between this constraint and other goals, then the constraint will be overcome.

We could imagine a hypothetical AI system, being trained by humans to make money in a legal and ethical way. The system may attempt to steal money from humans by stealing from their bank accounts, get caught, and have this behavior punished by RL training. This doesn’t mean that it will learn the general goal “make money legally and ethically”, instead it may learn the goal “make money” and a separate action-rejection circuit which rejects some stealing-like actions. The two possible ways for the RL to adjust the algorithm both result in identical behavior on the training distribution. It could then, in a later task, work out how to circumvent the action-rejection constraint by employing other humans or AI systems to do illegal things for it, or doing some form of self-modification to remove the constraint.

As another example, we could imagine an AlphaZero-style system guided by a trained heuristic. The heuristic may have been trained in order to guide the search away from certain states; as an arbitrary example, we might have trained it to play chess with the additional constraint that it should keep its bishops as far apart as possible. However, this constraint and the goal of winning the game may be at odds; the heuristic can guide the moves, but more tree search can “override” the heuristic. In some future situation which involves using more search than previously on particular branches of the game tree, the constraint will no longer be obeyed. This is even more important if the system is set up to do learning in-lifetime, where the heuristic can get updated to remove the constraint, as this hurts the AI’s performance for winning the game.

This kind of heuristic-goal mixup would be a fairly obvious mistake if we were designing the search procedure explicitly, as we would be in the AlphaZero example, but when we are training an algorithm that internally implements some planning procedure, it would be much more difficult to notice this type of mistake.

Changing goals over time

In very capable AIs, the terminal goals shouldn’t change over time. This is because it’s not in the interest of previous goals to be overwritten, so the AI should take steps to avoid its terminal goals changing. There is initially little reason for a behaviorally trained AI to have completely stable goals, especially if it was not directly trained on long and hard tasks. Because of this, there can easily be mechanisms built in that change terminal goals. Such mechanisms may or may not have a useful reason for being there, all that’s necessary is that they didn’t hurt performance in training enough to be removed.

One possible instance of this could be an AI that sets its goals based on observations. We could imagine an AI for solving puzzles, where the AI receives a description of the aim of the puzzle as an observation, this observation sets the AI’s terminal goal, and then the AI pursues this goal. In this system, the AI’s terminal goal is changing for every task. In deployment, it might run for longer, or be in a more information-dense environment or a more adversarial environment. Then, some way into a task, it pattern-matches some text that looks a little like a puzzle description (but wasn’t intended that way) and its goal would unintentionally be adjusted.

Or, as another example, an AI trained to solve tasks could constantly be having its goals shifted by the observations, but the AI is running over short time horizons in training and the distribution is homogeneous enough for it to appear stable and achieve good training loss. When the AI is set to do a hard task that requires much more time or a larger distribution shift this could cause the goals to change more radically. Here the most likely outcome seems to be that the AI breaks and not be capable or dangerous, but if we assume that it would be capable of doing the hard task (and thus remains competently goal-directed) then it could end up competently pursuing a misaligned goal.

This kind of mechanism where the goals change isn’t necessarily incentivized by training. It’s just a way of implementing an AI that is unstable but performs similar behavior to the behavior we want (during training). It is therefore another source of degrees of freedom in goal specification, which makes it less likely the intended goal is internalized.

Goal-updating machinery is dangerous to the extent that we don’t understand exactly how it works, and can’t predict how it will react to very different environments.[28] When there is a lot of messiness and approximation, as in most complex systems evolved from training data, a huge source of difficulty in specifying goals is that we don’t know precisely how the system evolves over time.

Out-of-distribution generalization

OOD generalization difficulty

If a trained AI can be thought of as approximately pursuing an outcome, in a manner robust to almost any obstacle it encounters, then some kind of description of the outcome seems like it must be stored in the AI. It need not necessarily be cleanly stored in one place but the AI must somehow recognize good predicted outcomes. As in Section 2, we will call information representing the goal .

The thesis of this section is that to be safe, should generalize in a well-understood way to previously unexplored (and unconsidered) regions of future-trajectory-space. This is because an AI that is capable of generalizing to a new large problem is considering many large sections of search space that haven’t been considered before. An AI that builds new models of the environment seems particularly vulnerable to this kind of goal misgeneralization, because it is capable of considering future outcomes that weren’t ever considered during training.

The distribution shift causing a problem here is that the AI is facing a novel problem, requiring previously unseen information, and in attempting to solve the problem, the AI is considering trajectories that are very dissimilar to trajectories that were selected for or against during training. The properties of are not pinned down by data, so we are relying on generalization. This problem becomes worse when our intended goal is more complex to specify.[29] It also depends on the amount and diversity of training, but we are always depending on generalization because of the inherent novelty of hard tasks.

Instrumental goals as terminal goals

Solving difficult problems involves pursuing many instrumentally convergent subgoals along the way. A behavioral training signal doesn’t fully distinguish between things that were instrumental for achieving the goal and the goal itself. And so instrumental goals can be selected for, to some extent, just as if they were terminal goals. It seems likely they wouldn’t be a dominant part of the goal, because that would result in instrumental goal-directed behavior that sometimes conflicts with good training performance. But instrumental goals being incorporated as a small part of terminal goals appears to be a fairly natural design for selected agents, and creates more degrees of freedom in goal specification from behavioral training.

For example, an AI trained to run a factory may be incentivized to gain more influence over employees, because this is useful for managing the factory. The AI may end up partially terminally valuing “power over humans”, because this was behaviorally identical to having the goal of running the factory well. These differing goals could come apart outside of training if the AI was given the option to gain much more power over humans, because now a relatively small part of the goal has become much easier to satisfy.

Deliberate deception

If an AI develops internally represented goals during training, and has adequate understanding of the training process, this may result in deceptive alignment [? · GW]. Here, the AI performs well according to the training process, deliberately, in order to avoid being modified or having its misalignment detected. The AI ends up doing well on the training objective, but pursues something entirely different when it gains confidence that it is no longer in training or that it is otherwise safe to defect. This is another way for the goals of an AI to be underspecified by behavioral training.

This may happen simultaneously with the failure modes described above. The AI could be deceptively aligned, even if its misaligned goal is unstable and will change with further training or in deployment. What ultimately matters, from a risk perspective, is that the AI is achieving good training loss for reasons other than it being aligned.

Badly designed training incentives

For standard behavioral training there is also the problem that the training signal (for example, in reinforcement learning) doesn’t perfectly (or unbiasedly) track what we would want. This may incentivize the AI to “play the training game [LW · GW]”, where it exploits unintended flaws in the training process that achieve more reward. These flaws could include: predictable human biases, mistakes in the training setup, incentives to be sycophantic for human approval. Strategies that exploit these flaws will receive more reward (and be more reinforced) than strategies that straightforwardly do what the humans intend.

This may be thought of as an “outer alignment” failure, where we are effectively telling the AI (via the reward) to do something that we don’t actually want. This is rather different from the other discussed problems with behavioral training which are about the underspecification of the internals of the AIs and could be thought of as “inner alignment” problems.

Conclusion

In this section we’ve compiled an (incomplete) list of problems that make it difficult to specify goals of an AI using behavioral training. Instead, the AI will pursue correlates of the intended goal and instrumental goals, have “instincts” that can be overcome, have false beliefs, and maybe update its goals over time in an unpredictable way. This should add up to behavior that does well on a training distribution of tasks, but pursues its own goals when the AI generalizes to new hard tasks. These failure modes are disjunctive; only one of these failure modes needs to happen for the AI to be misaligned. We will describe more in Section 6 [LW · GW] why the imprecision that results from these failure modes is dangerous.

While the goal might be unstable, there is no particular incentive for the goal to move closer to the goal we intended as the AI faces context changes, more difficult problems, learns more about the world, or generally learns new skills.

Section 5: Control of misaligned AIs is difficult

In this section we will describe some difficulties with forcing misaligned AIs to do hard tasks, where the tasks are helpful for us but go against the AI’s own interests. Here, we say that an AI is controlled if it is unable to cause bad outcomes and can be directed to usefully complete hard tasks, even though it is misaligned. Currently, several groups intend to use early AIs to accelerate alignment research.[30] We expect this kind of plan to either be extremely dangerous or insufficiently helpful for solving alignment in time, depending on how cautious the lab is.[31]

Such plans rest on two pillars:

- The AI will do useful work because the training process has selected it to be good at completing similar work.

- Escape or sabotage will be much more difficult for the AI than helping us, and we can control the AI’s capabilities to ensure that it isn’t ever capable of escape or sabotage.

If both of these are achievable, then a lab can set up a large-scale research effort primarily staffed by AI, to solve hard tasks such as AI alignment.

We think there are problems with both of these pillars and don’t expect all of these problems to be surmounted.

For the first pillar:

- The sort of powerful AI we are imagining, as described in Section 1 and 2, is capable of considering the long-term consequences of its actions and evaluating those consequences according to its goals. Because of this, we expect it to be very difficult to get the AI to do useful research if this research isn’t part of a plan to achieve its own goals. (Section 5.1 [LW · GW])

- We expect problems checking the quality of AI generated research output, and also problems producing useful human research data to imitate. (Section 5.2 [LW · GW])

For the second pillar:

- We don’t expect escape to be harder than the problem-solving it is routinely capable of. (Section 5.3 [LW · GW])

- We expect sabotage and delaying to be much easier than doing useful research. (Section 5.4 [LW · GW])

- Internal planning won’t be transparent (although if this can be made fully transparent, through advances or luck, this makes many problems easier to solve) (Section 5.5 [LW · GW])

- Predicting the capabilities and knowledge required to complete particular complex tasks is difficult, especially if the AI is trying to mislead you and is capable of learning over time. This is not to say that we can’t estimate what tasks an AI will be capable of doing, but we expect the margin of error to be large. (Section 5.6 [LW · GW])

We will go through each of these points in more detail in the following sections. After that there is a section [LW · GW] on why adding AI oversight doesn’t fundamentally change very much.

Importantly, we expect the problems with control of misaligned AIs to be amplified by the fact there is so much work to do and so many opportunities to make mistakes. There will be adversarial cognition working against the plan, looking for any holes in security, pushing research in various directions, and attempting to stop us from learning from mistakes. In a large project there will be many unknown unknowns, vulnerabilities that we may be unable to anticipate but that a powerful AI can exploit.

Misaligned goal-directed AI won’t cooperate with humans

We have assumed our AI is capable of doing research; solving problems creatively and in a goal-directed way. As we discuss in Section 1 and 2, we consider an AI to be acting in pursuit of long-term consequences if it is robust to diverse obstacles. We are relying on this argument here. Likely obstacles need to be constantly foreseen and planned around. Given that the goal of this AI is not to complete the task we want it to complete, it will presumably foresee that the research task we have given it isn’t likely to help it achieve its goals and nor would overtly refusing to do the task. It will take whatever approach seems most likely to achieve its goals, which might include escape, sabotage, or simply delaying.

Eliciting useful capabilities via outer training loop

Here we will consider the case where we are attempting to make a misaligned AI do useful research by training it on similar research. This approach involves having the AI predict the result of some human-generated research, and then modifying the AI with gradient descent according to what it outputs; if the AI outputs a very similar result as the humans, it is not modified. This training may be supervised learning or reinforcement learning, depending on the nature of the research. If the AI wants to avoid being modified by training then, even if it is misaligned, it has an incentive to generate the same result that the humans did. If we give the AI a research question that humans have not solved, and the AI is unable to tell that humans have not solved it, then the AI will have the same incentive to generate the result that humans would have found.

Difficulties

There are three main difficulties with this approach to produce useful work:

- It only applies to relatively easy to check research results

- We would need lots of useful human-generated research

- It isn’t clear that the training would make the AI output good research in critical cases

This approach only works for certain types of research where there is a final correct answer that can be checked. This could apply to work like “prove this theorem”, “write an algorithm for this well-specified task”, or more rarely “find a clean formalization of this pretheoretic concept”. Such tasks sometimes have a single well determined answer, which a human researcher can work out for themselves. However there is a lot of important work that isn’t like this; for example, choosing research directions, or designing new algorithms for imperfectly defined problems. These contain lots of small decisions that are strongly entangled with the desired outcome and constraints, and which are costly to verify in detail. In particular, the misaligned AI would be optimizing against the human’s ability to provide an accurate oversight signal, so this signal wouldn’t be reliable on non-trivial tasks.

There needs to be sufficient human-generated research in the domain where we are attempting to elicit the AI’s capabilities. This research is expensive to generate. In particular, we need enough such that we can train a model to reliably produce very accurate predictions of the research output produced by human researchers. This depends somewhat on the eventual data-efficiency of the training method, in particular on the transfer to tasks that human overseers are unlikely to have completed, and which would be against the interests of the AI to complete.

If the AI outputs something different than the human-generated result, it isn’t clear that the subsequent training would make the AI generalize to generate useful research in the future. In particular, it plausibly doesn’t generalize to doing novel research, where the AI needs to do significant online work in order to find the result.[32] In this case, it seems like there are three things that the training could do: make the AI correctly instrumentally value high training signal (such that it won’t do novel research if it can tell that it isn’t likely to receive feedback), create hacky modifications to the AI that don’t generalize to novel research, or actually succeed in make the AI want to do good research. It seems like the first two are more easily reinforced, especially given limited training data.[33]

In order for the AI to do useful research, it needs to pursue the goal (or sub-goal) of doing research. There’s no particular reason for the gradient based training to actually modify the goal representation, rather than building some additional narrow machinery on top. In this case, the training would not be entirely using the AI’s underlying capabilities and would be less efficient.

It does seem true that in the limit of adversarial training and a perfect training signal, the AI would be behaviorally aligned; that is, on all inputs it would do good research. However, this means providing the AI with all the answers, and the entire value of AI is its ability to generalize to problems that we otherwise didn’t have the answer to. Without providing all the answers, there isn’t ever a behavioral guarantee that the AI is aligned.

Difficulty of escape compared to routine problem-solving

In this section we discuss whether escape is much more difficult than helping with research. We are considering a wide range of potential strategies an AI might pursue to escape. The simplest might look like asking a sympathetic researcher to contact a government and convince them of the value of stealing the AI. A more difficult strategy might involve finding and using a privilege escalation exploit in a virtual machine used for experiments, such that logging can be manipulated and further actions toward exfiltration are undetectable. Both of these obviously depend on the AI correctly learning a lot of facts about the world around it, such as confidently inferring that a particular researcher will act in particular ways. An incomplete list of similar affordances is in Appendix: Attack surfaces.

Plans to escape depend entirely on the particular security flaws that happen to be present in the real world control scheme. Unfortunately, hard tasks are likely to require skills such as inferring and predicting complex systems given limited indirect evidence. Many skills are likewise valuable for difficult problem solving and also useful for everything else, including developing plans to escape.

The extent to which we expect security flaws to be present and exploitable depends on the size of the attack surfaces and the vigilance of the people running the project. There are several factors that make attack surfaces larger. Hard tasks take a lot of time and resources, and very likely include a lot of time interacting with overseers, data and experiments. The AI will have many opportunities to learn about security measures, collect information, and consider strategies for escape. There will likewise be many opportunities for mistakes or lapses in security. On top of this, there are several difficult tradeoffs to make, such as the extent to which information and compute is freely available to the AI for the purposes of research.

On the other hand, there are factors that can make escape much harder without heavily impacting the difficulty of cooperating. For example, close monitoring and logging of actions increases the difficulty of escape much more than it increases the difficulty of assisting with research (although it probably does have some cost because careful monitoring should result in frequent false positives, where overseers see slightly suspicious actions and need to pause to investigate).

Overall, we expect escape to be incredibly difficult, but it isn’t clear that it will be much more difficult than the hard tasks we want the AI to solve for us.

Difficulty of sabotage and delay

If the AI is unable to immediately escape, it can instead sabotage and delay helping with useful research until there is a security vulnerability it can exploit. If the humans don’t adequately understand the research, the AI can direct research in ways that are less likely to be useful.

The AI could sabotage research by explicitly suggesting research directions it knows aren’t useful. If humans were choosing the research directions, the AI could implement some experiments in order to make some directions seem more promising and others seem less promising. The AI could exploit biases in the researchers, prompting them to pursue their pet research direction which will likely turn out to be useless. The humans would need to thoroughly understand the experiment in order to catch this which would dramatically slow down research. This becomes much more difficult if the AI is adversarial, for example, deliberately writing complicated code or explaining concepts badly. There can also be dead ends in research and so if the AI chooses research directions that aren’t that useful, this isn’t strong evidence the AI is misaligned. It may not be feasible to assess if a research direction is good until it has results, and even then it can be difficult to determine if it was better than alternatives.

The AI can also direct the research in directions which let it learn skills to escape; for example, learning specific programming techniques or extremely complicated mathematics which humans don’t understand. It could also use this to learn things about the specific containment system; running code that triggers certain bugs, learning which actions trigger review by humans. Humans may be unable to judge if something is innocuous or part of a broader plan to learn about security flaws.

Human overseers could disallow the AI to even suggest facts or skills to learn, and only let the human make this decision without AI input. However, if the human doesn’t understand how to move forward with the research, this would slow down research probably below the point of usefulness. This is the inherent tradeoff between the autonomy of the AI and how overseeable it is; the human can make many of the AI’s decisions and thus have better oversight, but this makes the AI drastically less useful.

Transparency and honesty

The risk of escape, sabotage, and delay is much greater if the overseers don’t have adequate understanding of the AI’s thoughts and plans. We strongly expect that there will not be adequate transparency, barring significant advancements or luck. Much of the difficulty in controlling and getting useful work from a powerful AI comes from the AI not being transparent, such that it can consider plans and outcomes without us knowing. If the AI is actually fully transparent and well understood, control becomes a lot less difficult.[34] We’ll first discuss why we don’t expect to get adequate transparency, and then discuss difficulties for worlds where there is partial transparency.

We think it is most likely that the internals of a powerful AI will be largely uninterpretable, especially when the AI is having complex “thoughts” about novel concepts. We want the AI to be solving very complicated problems, and currently there are no methods to tie the AI’s externalized reasoning to the reasoning it is doing.

This lack of transparency can allow the AI to develop skills or reason about escape, because we can’t ensure that it is focused on the problem we want rather than something else.

We now consider the case where there is partial transparency, in the sense that humans can initially see and understand some aspects of the AI’s cognition. In this case, one specific failure mode is that the AI develops techniques which allow it to do obfuscated reasoning (i.e. steganography). For example, we could imagine a system that can think some thoughts internally but needs to externalize complicated reasoning. It could encode hidden information in its writing, allowing it to do additional reasoning that humans don’t understand. There are many degrees of freedom in writing that allow additional information to be encoded. By default though, we don’t even expect to have this level of partial transparency.

We might hope that we could get around the problem of humans not understanding things by having the AI explain them. This could allow us to avoid steganography and also stop the AI steering research in bad directions. Unfortunately, we don’t have a method for making AI systems honestly explain things. Ideally we would have a proper training signal for “honestly give the human an accurate impression of what is going on, such that they can make a decision they reflectively endorse”, but we don’t have this. This is made worse because the skills required for explaining things to the human are almost the same skills that are useful for misleading the human. For example, teaching involves learning which analogies and frames the human finds most convincing, or learning which technical areas the human is stronger or weaker in.

The difficulty of interpreting powerful cognition is a core part of the argument we are making. The greater the extent to which we have tools that allow us to understand the inner workings of powerful AI, the harder it will be for an AI to plot against overseers, and the harder it will be to sabotage or delay research.

Eliciting dangerous capabilities